News

AI News and Accomplishments

May 1, 2025

Researchers at Rensselaer Polytechnic Institute (RPI) are tackling one of the most complex challenges in the world of quantum information – how to create reliable, scalable networks that can connect quantum systems over distances.

March 24, 2025

Lung cancer is one of the most challenging diseases, making early diagnosis crucial for effective treatment. Fortunately, advancements in artificial intelligence (AI) are transforming lung cancer screening, improving both accuracy and efficiency.

March 3, 2025

Quantum computing is rapidly reshaping the landscape of digital security, and a team of researchers from the Rensselaer Cybersecurity Collaboratory (RCC) at Rensselaer Polytechnic Institute (RPI) is leading the charge.

March 3, 2025

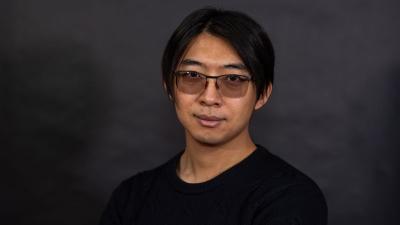

Rensselaer Polytechnic Institute (RPI) AI expert Jim Hendler, Ph.D., Tetherless World Senior Constellation Professor of Computer, Web, and Cognitive Science, has received the 2025 AAAI Feigenbaum Prize, one of the most prestigious honors in the field of artificial intelligence (AI).

October 7, 2024

Rensselaer Polytechnic Institute President Martin A. Schmidt ’81, Ph.D., has been appointed to the CHIPS Industrial Advisory Committee (IAC). This group of leaders from industry, academia, federal laboratories, and other areas provides guidance to U.S.

Rensselaer News Feed

Rensselaer Polytechnic Institute (RPI) is proud to welcome the second cohort of Hudson Valley Community College (HVCC) students to the Semiconductor Scholars Program, an initiative supported by the NORDTECH DoD Microelectronics Hub.

Rensselaer Polytechnic Institute (RPI) is proud to announce that Pingkun Yan, Ph.D., associate professor of Biomedical Engineering at RPI, has been appointed chair of the IEEE Engineering in Medicine and Biology Society (EMBS) Technical Committee on Biomedical Imaging and Image Processing (BIIP).

Rensselaer Polytechnic Institute (RPI) has received a $10 million gift from Ajit Prabhu ’98, founder and CEO of Quest Global, to establish the Ajit Prabhu Catalyst Endowment and the Ajit Prabhu Catalyst Fund, both in support of the Office of Strategic Alliances and Translation (OSAT). The fund Prabhu launched will assist RPI student and faculty entrepreneurs.

Rensselaer Polytechnic Institute’s Georges Belfort, Ph.D., and Steven Cramer, Ph.D., both Institute Professors of Chemical and Biological Engineering, have won the 2025 Bernard M. Gordon Prize for Innovation in Engineering and Technology Education given by the National Academy of Engineering.

Lung cancer is one of the most challenging diseases, making early diagnosis crucial for effective treatment. Fortunately, advancements in artificial intelligence (AI) are transforming lung cancer screening, improving both accuracy and efficiency.
